And All That Hype, Cybersex, In the Movies, Stereoscopic 3D

Speechless!!...

How-To; Teardowns; Tutorials, Stereoscopic 3D

They say you’re not a true 3D enthusiast until you’ve got a shelf full of red/cyan and green/magenta anaglyph 3D glasses. I’m ready to dump mine in the waste bin, but there’s this little problem: two more shelves of anaglyph DVD, Blu-ray and VHS...

And All That Hype, Game Systems, Head Mounted Displays, Stereoscopic 3D, VR Companies, Where Are They Now?

Sega (all hail Sonic!): 1991 brought the announcement of Sega VR, a $200 headset for the Genesis console. A prototype was finally shown at Summer CES 1993, and the project was consigned to the trash heap of VR in 1994, before any units shipped. Sega claimed that the helmet experience was...

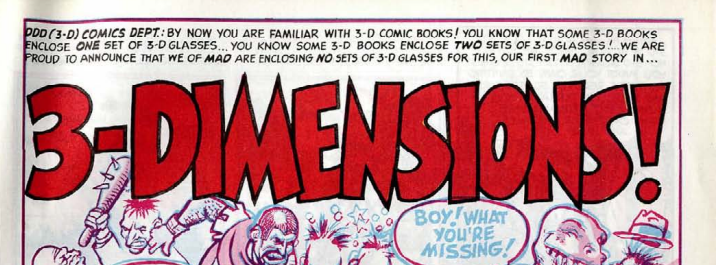

And All That Hype, Stereoscopic 3D

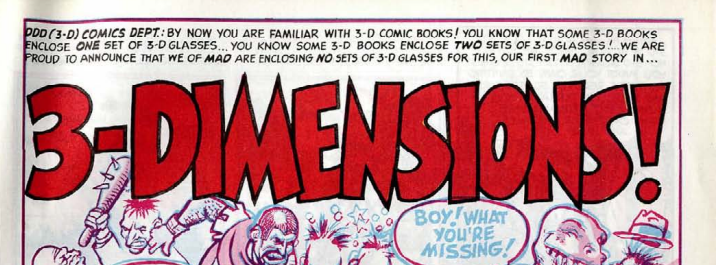

MAD Magazine, June 1954: DDD (3D) COMICS DEPT: By now you are familiar with 3-D Comic Books! You Know that some 3-D books enclose One set of 3-D glasses… You know some 3-D Books enclose Two sets of 3-D glasses! We are proud to announce that we of Mad are...

Around the World, Head Mounted Displays, Stereoscopic 3D, VR Companies

Recently I got my hands on a brand new Vuzix video see-through augmented reality HMD, the Wrap 920 AR. It’s not quite a consumer product; it’s more focused on R&D in the augmented reality field. There is only a small amount of information about it on the net, and few people...

And All That Hype, In the Movies, On TV, Stereoscopic 3D, VR Companies

We all know that the 1950s were the golden age of 3D movies, Hollywood’s attempt to fend off the rapidly growing television audience. Their 3D thrust was short-lived, and with a few exceptions, we enjoyed almost 50 years of 2D bliss. This time around 3D...

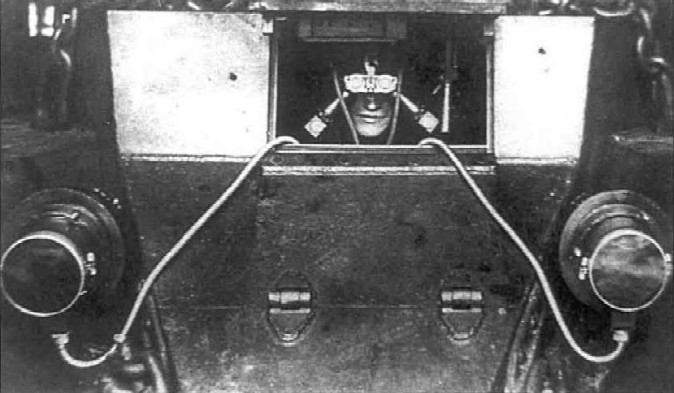

Around the World, Head Mounted Displays, Stereoscopic 3D, Where Are They Now?

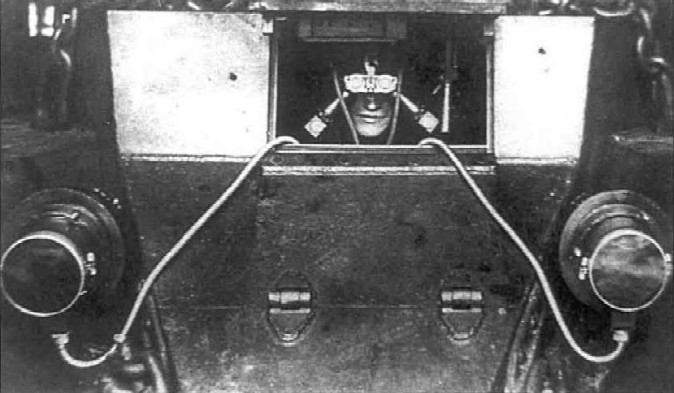

Suddenly, I found information that the USSR army, just before World War 2, developed electronic head-mounted infrared night-vision goggles for tank crews! It is not exactly a virtual reality subject, but nevertheless it’s the early days of electronic HMDs in...

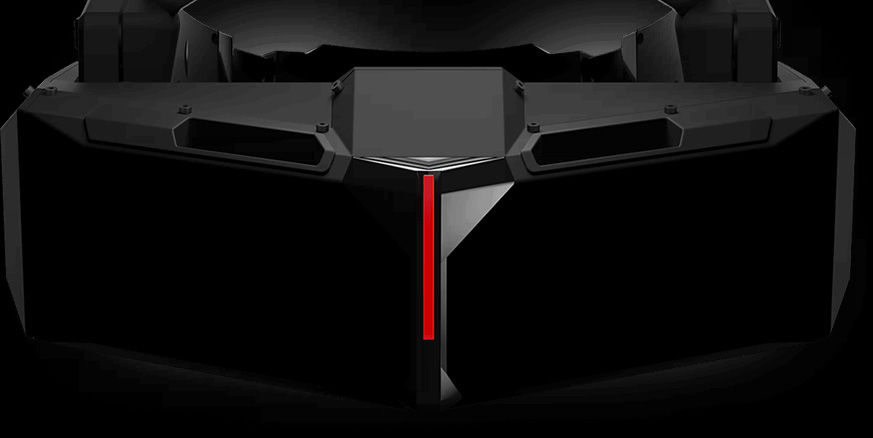

Head Mounted Displays, Stereoscopic 3D

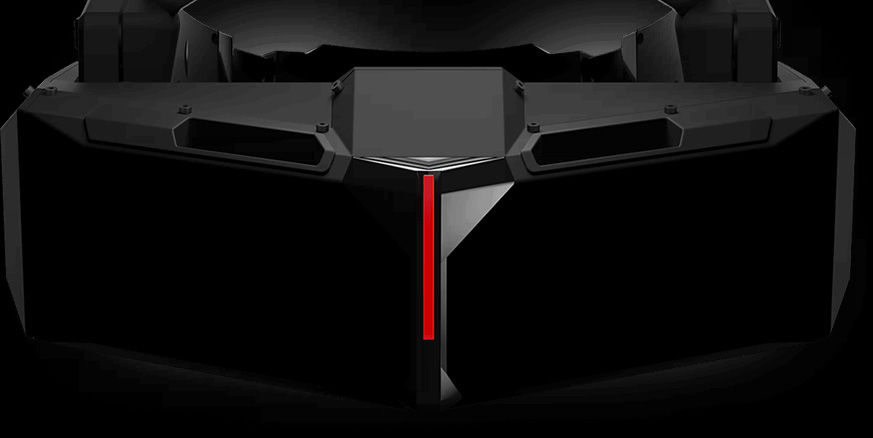

Size matters! If you asked the manufacturers of Head Mounted Displays over the past 15 years, they would echo that mantra, but it’s SMALL size that they’re boasting about. Indeed, those tiny little eyeglass-size VR displays look cool (from the outside), but...

Head Mounted Displays, How-To; Teardowns; Tutorials, Stereoscopic 3D

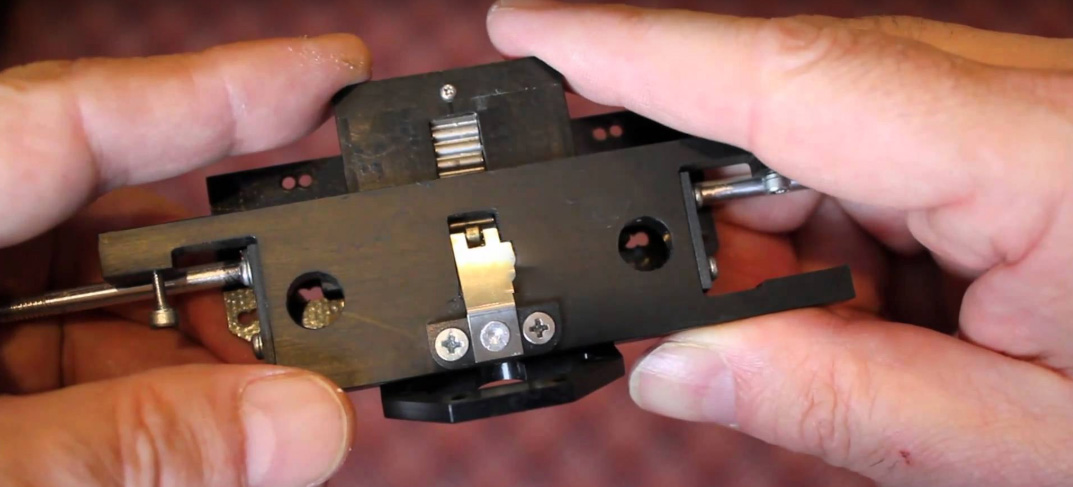

If you look yourself in the eyes, you’ll start to realize that your eyes and your head are different from anyone else’s. The spacing between your eyes, known as the interpupillary distance, is about 65mm, but this varies from 50mm to about 75mm, depending on...

And All That Hype, Head Mounted Displays, Stereoscopic 3D

Over at Meant to be Seen 3D, in answer to a forum post looking for the perfect HMD, board vet cybereality took the time to respond in depth… Money quote: Well, sadly to say it, you will probably be waiting for a long time. There is nothing I know of on the...

Around the World, Head Mounted Displays, Stereoscopic 3D

Who can remember doing all their 3D animation in MS-DOS? Back in the day, there was Gary Yost’s 3D Studio (not Max!), licensed to and supported by Autodesk. Now, who remembers creating stereoscopic animation with 3D Studio? VREX had a great little plugin that...

Head Mounted Displays, Stereoscopic 3D, VR Companies

IMHO, the Virtual Research Flight Helmet was, and still is, the ultimate head mounted display, except, of course, that it needed modern high resolution LCD panels. Otherwise, it had an incredible field of view, great ergonomics, and unbeatable LEEP optics. I came across a more...

Around the World, Game Systems, Head Mounted Displays, Stereoscopic 3D, VR Companies

The Forte VFX1 was the most advanced, complex and expensive consumer VR system to appear on the market during the VR craze of the mid-nineties. Introduced in 1995, the VFX1 was in shops all around the world in 1996. Hardware overview: the system consisted of...

Head Mounted Displays, How-To; Teardowns; Tutorials, Stereoscopic 3D, VR Companies

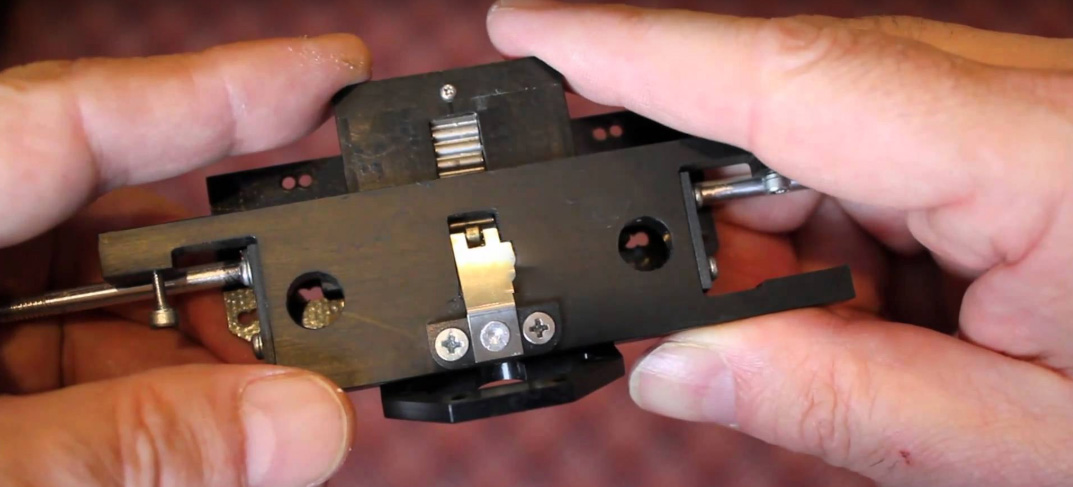

1995 brought us the V6 head mounted display from Virtual Research, the successor to the excellent design of the VR-4. The V6 doubled the overall resolution while retaining the great optics, field of view, comfort, and ease of use originally found in the VR-4. In...

How-To; Teardowns; Tutorials, Stereoscopic 3D

If you’ve been into 3D still photos for a while, no doubt you’ve come to love StereoPhoto Maker, a great (free) Windows-based tool for aligning, cropping, correcting and adjusting 3D digital pictures. But when you’re done fooling with the pixels, how...